How I Over-Engineered the ASP.NET Health Monitoring Feature

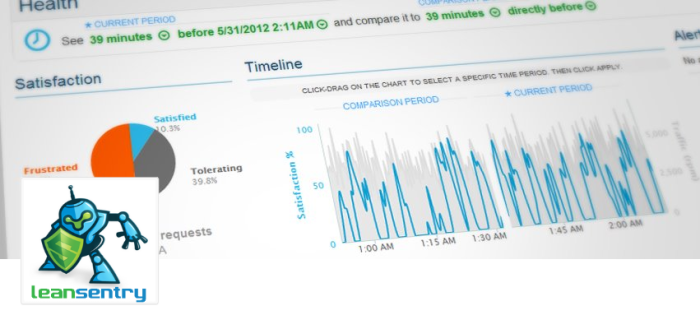

ASP.NET Health Monitoring was one of the major features I worked on for the ASP.NET 2.0 release. Fast forward 8 years: since then I have helped ship ASP.NET and IIS7, and built LeanSentry. This is the story of that feature, the lessons I learned while building it, and a practical take on when to use Health Monitoring to monitor your ASP.NET applications, and when not to.